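The "Xuecui Forum" (学萃讲坛) upholds the ideal of learning from distinguished scholars and gathering the best of science and technology. With scholarship as its soul and education as its foundation, it pursues technological innovation, raises academic standards, and fosters a rich academic atmosphere. Join us for a feast of science and technology!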

Speaker: Dr. Weiyi Shang

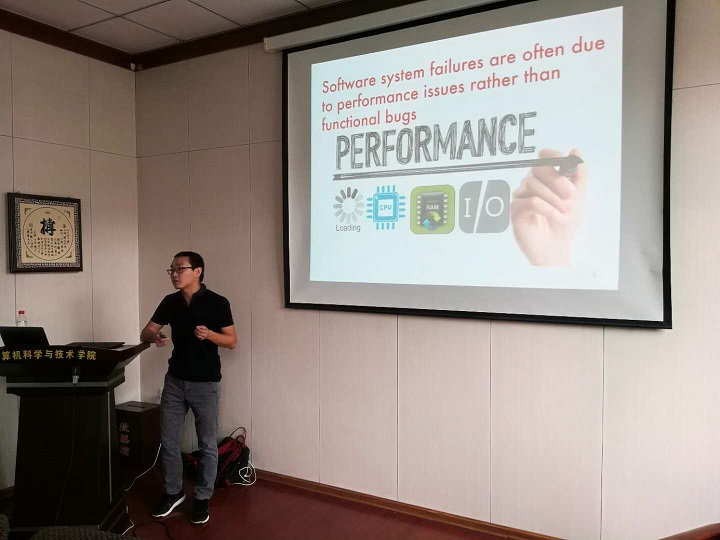

Title: Towards Just-in-time Mitigation of Performance Regression Introducing Changes

Venue: 21#426

Time: August 27, 2018, 10:00-12:00

Host: Institute of Science and Technology (科学技术研究院)

Speaker bio: Dr. Weiyi Shang is an Assistant Professor and Concordia University Research Chair in Ultra-large-scale Systems at the Department of Computer Science and Software Engineering at Concordia University, Montreal. He received his Ph.D. and M.Sc. degrees from Queen’s University (Canada) and his B.Eng. from Harbin Institute of Technology. His research interests include big data software engineering, software engineering for ultra-large-scale systems, software log mining, empirical software engineering, and software performance engineering. His work has been published at premier venues such as ICSE, FSE, ASE, ICSME, MSR and WCRE, as well as in major journals such as TSE, EMSE, JSS, JSEP and SCP. His work has won prestigious awards, such as a SIGSOFT Distinguished Paper Award at ICSE 2013 and a best paper award at WCRE 2011. His industrial experience includes helping improve the quality and performance of ultra-large-scale systems at BlackBerry. Early tools and techniques developed by him are already integrated into products used by millions of users worldwide. Contact him at shang@encs.concordia.ca; http://users.encs.concordia.ca/~shang.

Abstract: Performance issues may compromise user experience, increase resource costs, and cause field failures. One of the most prevalent performance issues is performance regression. Due to the importance of and challenges in performance regression detection, prior research has proposed various automated approaches that detect performance regressions. However, performance regression detection is conducted after the system is built and deployed. Hence, large amounts of resources are still required to locate and fix performance regressions. We conduct a statistically rigorous performance evaluation on 1,126 commits from ten releases of Hadoop and 135 commits from five releases of RxJava. We find that performance regressions widely exist during the development of both subject systems. By manually examining the issue reports that are associated with the identified performance-regression-introducing commits, we find that the majority of the performance regressions are introduced while fixing other bugs. In addition, we identify six root causes of performance regressions.

In order to mitigate such performance-regression-introducing changes, we propose an approach that automatically predicts whether a test would manifest a performance regression in a code commit. In particular, we extract both traditional metrics and performance-related metrics from the code changes that are associated with each test. For each commit, we build random forest classifiers that are trained on all prior commits to predict whether each test would manifest a performance regression in this commit. We conduct case studies on three open-source systems (Hadoop, Cassandra and OpenJPA). Our results show that our approach can predict performance-regression-prone tests with high AUC values (on average 0.80). In addition, we build cost-aware models that prioritize tests to detect performance regressions. Our prediction results are close to the optimal prioritization. Finally, we find that traditional size metrics are still the most important factors. Our approach and the study results can be leveraged by practitioners to effectively cope with performance regressions in a timely and proactive manner.
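To make the evaluation ideas in the abstract concrete, here is a minimal sketch of how predicted scores could be judged with AUC and turned into a cost-aware test ordering. The abstract does not say how the cost-aware models are built, so everything here is an assumption for illustration: the `Prediction` record, the test names, the numbers, and the ranking rule (predicted probability divided by run cost) are hypothetical, and the random forest that would produce the probabilities is not shown.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    name: str           # hypothetical test identifier
    probability: float  # assumed classifier output: chance the test regresses
    cost: float         # assumed cost of running the test (seconds, must be > 0)
    regressed: bool     # ground truth, known only after the test has been run


def auc(predictions):
    """Probability that a regressing test is scored above a non-regressing one.

    Ties between a positive and a negative count as half a win.
    """
    pos = [p.probability for p in predictions if p.regressed]
    neg = [p.probability for p in predictions if not p.regressed]
    if not pos or not neg:
        raise ValueError("AUC needs at least one test of each class")
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


def prioritize(predictions):
    """Order tests by predicted regression probability per unit of cost."""
    return sorted(predictions, key=lambda p: p.probability / p.cost, reverse=True)


def cost_to_find_all(ordered):
    """Total cost spent, following the order, until every regressing test has run."""
    remaining = sum(p.regressed for p in ordered)
    spent = 0.0
    for p in ordered:
        if remaining == 0:
            break
        spent += p.cost
        remaining -= p.regressed
    return spent


# Made-up example data, not results from the study.
tests = [
    Prediction("testA", 0.90, 30.0, True),
    Prediction("testB", 0.20, 5.0, False),
    Prediction("testC", 0.70, 10.0, True),
    Prediction("testD", 0.10, 60.0, False),
]
ranked = prioritize(tests)
print([p.name for p in ranked], auc(tests), cost_to_find_all(ranked))
```

Comparing `cost_to_find_all` for this ordering against the ordering that runs the truly regressing tests first (cheapest first) gives one simple way to express how close a prediction-based prioritization comes to the optimal one.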